Jobs at Risk, Jobs That Rise: What AI Is Really Coming For

Not a bloodbath—a reclassification: how AI will redefine the value of work.

When I was hired as CIO at Pepperdine University, I stepped into a role shaped by crisis. I inherited a PeopleSoft implementation that was widely seen as completely off the rails. Technically, the project was intact. It was on budget. The timeline still held. But across the university, the perception was clear: the project was a massive failure.

Not because the servers were down—but because trust had evaporated.

My predecessor had hired brilliant PeopleSoft engineers—some had written the product’s source code. But that deep expertise created a false confidence that success was about the technology. It wasn’t. I brought a sociologist’s lens, re-centered the project around empathy and communication, and made staffing changes to elevate people-first leaders. The turnaround succeeded—because we rebuilt trust.

In today’s dispatch, I want to apply that human-centered lens to the question everyone’s asking now: What does AI mean for the future of work? Beneath the hype and hand-wringing lies a deeper shift: knowledge workers must up their game, in both value and versatility, or risk seeing their roles rapidly become obsolete.

The big picture

AI is coming for the workforce. Or so we’re told.

Recently, Anthropic CEO Dario Amodei warned that AI could eliminate half of all entry-level white-collar jobs within five years. Unemployment, he said, could spike to 10 to 20%. Entire classes of workers, from analysts to paralegals to customer support, gone. The phrase he used was “white-collar bloodbath.”

A day later, CNN pushed back, framing the “white-collar bloodbath” as part of the AI hype cycle. They criticized the warning as a scare tactic—light on data, heavy on drama—timed to promote Anthropic’s latest product. The idea of soaring GDP alongside mass unemployment, they argued, defies basic economics. To them, it wasn’t a sober forecast—it was marketing, wrapped in urgency.

Amid the panic and pushback, a crucial question emerges: which jobs now matter? The story isn’t one of sudden collapse or utopian reinvention, but of a quiet, systemic reclassification. As AI automates routine work and accelerates technical work, the roles that endure will be those rooted in human range: adaptability, interpretation, and leadership.

What AI Actually Changes

Here’s what’s really happening, and we need to say it plainly:

AI doesn’t eliminate jobs evenly—it erodes roles and routines.

The hardest-hit positions aren’t senior—they’re junior, routine, and narrow.

What’s threatened is the career ladder itself—especially the bottom rungs.

And in higher education and the professional world, we are likely dangerously unprepared.

This isn’t about doom; it’s about seeing the shift clearly. Tasks aren’t just being automated; they’re being reassigned, redistributed, and reframed. Routine, entry-level roles, especially those built around repetition, are quietly eroding, while new responsibilities emphasize oversight, adaptability, and human judgment. The real transformation is that value and contribution shift subtly, often before we are fully aware of it.

A Better Frame: The Knowledge Worker Value Map

At Pepperdine, I published the article “Technical Skills No Longer Matter” to help IT leaders think differently about team maturity. At the time, it was becoming clear that technical proficiency alone wasn’t enough to deliver complex IT projects successfully. What mattered more was the ability to collaborate, adapt, communicate, and lead. The article’s maturity model captured this shift as a progression, from transactional roles focused on execution to transformational roles that shaped outcomes across the institution.

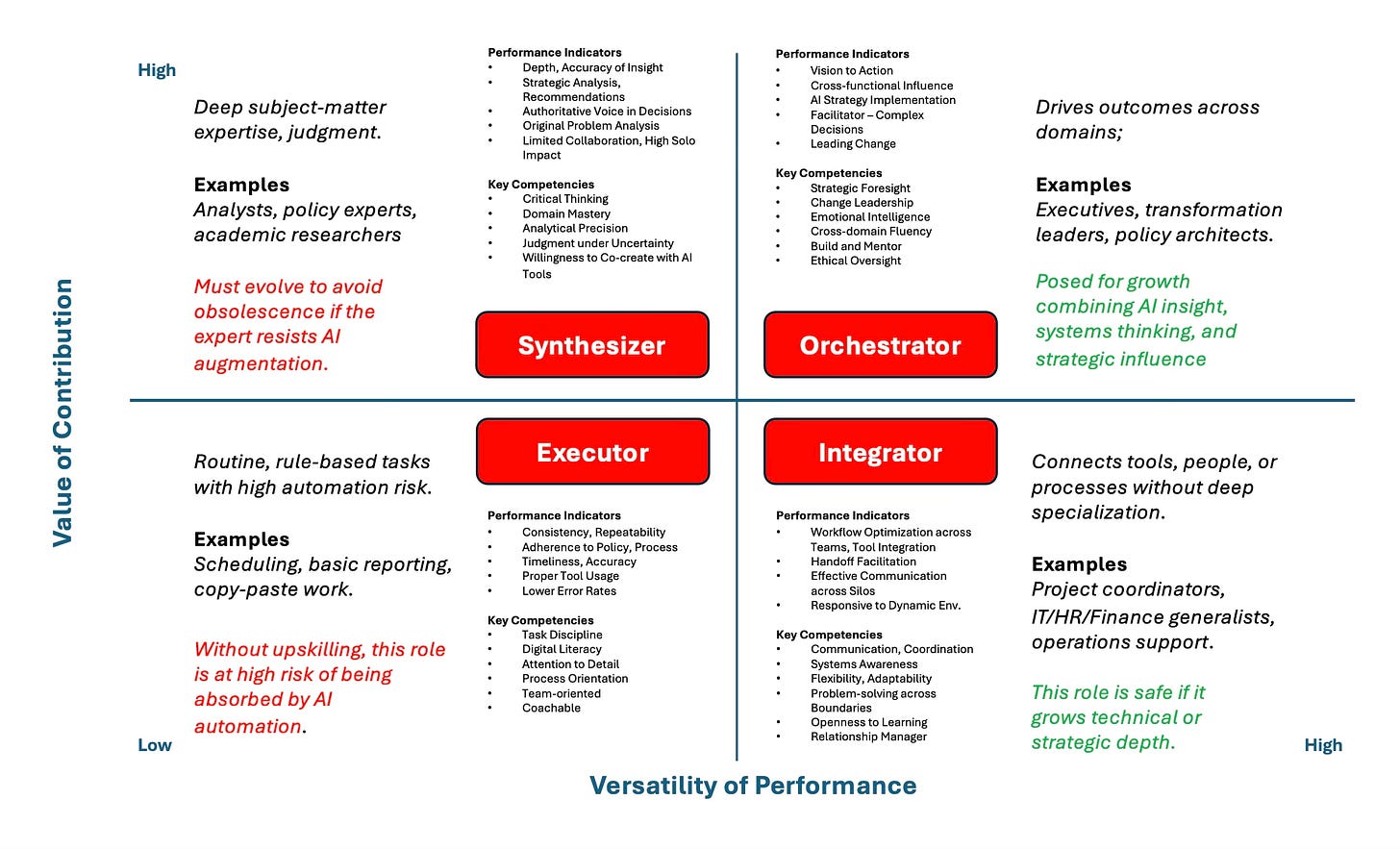

The model was built around two core dimensions that shape the value of work:

Versatility of Performance (Can you adapt, synthesize, collaborate, shift context?)

Value of Contribution (Does your work shape outcomes or just complete tasks?)

We can apply these two core dimensions to illustrate how different knowledge worker roles are impacted by AI, based not on titles or industries, but on how individuals create impact and adapt across contexts.

Whether in law, healthcare, IT, HR, or finance, the same pattern emerges: roles that combine high value and high versatility are thriving, while those lacking in either dimension face increasing risk. Four roles emerge:

Executor

Routine work, highly automatable

Examples: scheduling, formatting, entry-level reporting

AI risk: extreme

Human value: compliance, consistency

Reality: increasingly automated and shrinking—unless redefined to include broader responsibilities, tool fluency, or a pathway to higher-value contributions.

Integrator

Cross-functional glue, not deep expertise

Examples: project coordinators, ops roles, HR/IT generalists

AI risk: moderate

Human value: coordination, systems navigation

Reality: still viable—for those who grow beyond coordination, developing deeper subject matter expertise and the ability to work across domains.

Synthesizer

Deep expertise, low range, individual contributor

Examples: analysts, policy experts, researchers

AI risk: rising

Human value: context, judgment, domain wisdom

Reality: vulnerable—unless deep expertise is paired with cross-domain collaboration and a shift from solo work to team-driven, scalable impact.

Orchestrator

Strategic, adaptive, AI-augmented

Examples: executives, transformation leads, institutional architects

AI risk: low

Human value: alignment, leadership, foresight

Reality: growing in power and necessity—especially for non-executives who lead by aligning people, tools, and strategy across teams and domains.

What to Do Now

The roles most vulnerable to AI aren’t just technical or administrative. They’re narrowly scoped, routine, and resistant to change. Institutions that fail to respond will find themselves with pipelines full of people trained for work that no longer exists.

For businesses:

Rethink hiring: Stop filling yesterday’s roles. Start mapping talent to future value.

Build for range: Prioritize adaptability, collaboration, and AI fluency over narrow specialization.

Redesign work: Don’t just automate tasks—reimagine what human contribution looks like.

For higher education:

Drop the entry-level mindset: Stop preparing students for jobs that are vanishing.

Embed AI across the curriculum: Not just in electives—make it foundational.

Emphasize synthesis, not just skill: Design capstones around orchestration and judgment.

Grow integrators and orchestrators: Build programs that develop versatility, not just expertise.

This isn’t future-proofing. It’s present-proofing. Institutions that fail to evolve won’t just fall behind—they’ll train a generation for roles that no longer matter.

The bottom line

Yes, some jobs are vanishing—especially those built on routine, specialization, or hierarchy. But others are rising in value: roles that demand judgment, emotional intelligence, clear communication, and the ability to connect across systems and teams. These aren’t jobs that compete with AI—they’re jobs that make AI useful.

The future won’t belong to the most technical or even the most knowledgeable. It will belong to those who can adapt, synthesize, and lead through change. Not just smart, but versatile. Not just trained, but transformational.

It’s not about being safe from AI. It’s about being necessary alongside it.

Let’s help our people—and our institutions—move to the right side of the value map.

Hi Tim,

This was a timely dispatch. Just yesterday, I read a piece by Tom Soderstrom at AWS, noting that disruption is now occurring 50X faster than it has over the past 15 years. That idea stayed with me—and was reinforced again this morning during a conversation with my client, Bob Brower, former President of Point Loma Nazarene University. He shared his view that most institutions in higher education are not equipped to adapt at the pace today’s environment demands.

I had a question about your Knowledge Worker Map—it seems aligned with the classic People Skills quadrant model. Do you see the same parallels, or am I reading too much into it? To me, it seems certain personalities are more naturally wired for experimentation—and some move faster than others. I'd love to hear how you see it.

Lead boldly,

Hugh